Lead scoring

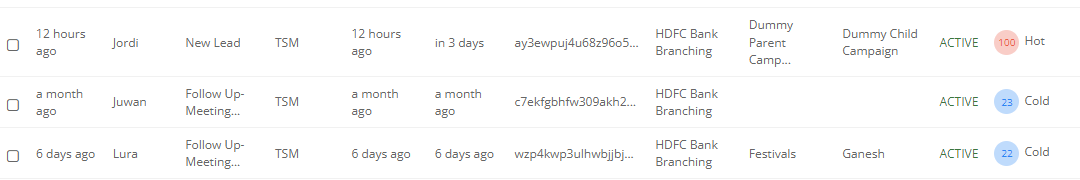

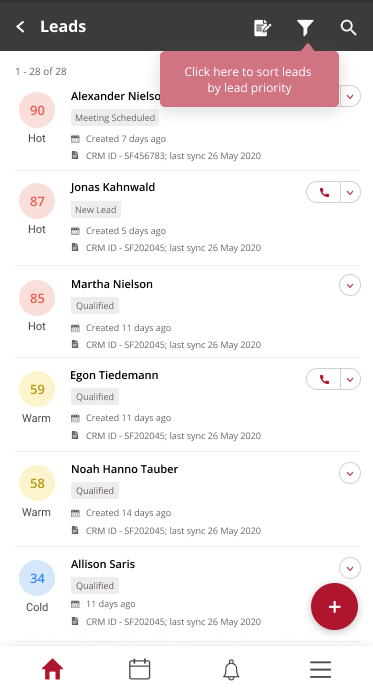

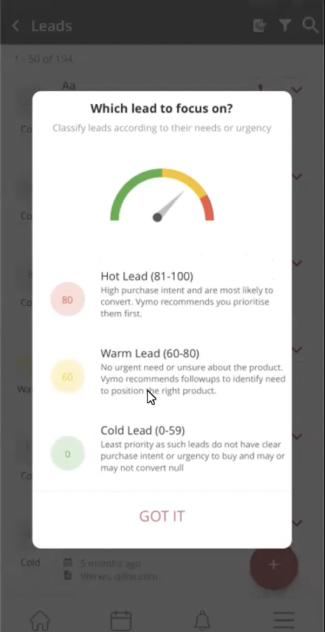

Lead scoring in Vymo uses machine learning (ML) algorithms to automatically assess and classify leads based on their likelihood of conversion. This makes lead prioritization more efficient and ensures that sales efforts are focused on the leads with the highest potential for conversion.

Each lead is scored by measuring how closely its details correlate with the history of leads that reached a converted state. The score is a numeric value from 0 to 100, and it determines whether the lead is classified as Hot, Warm, or Cold.
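The mapping from a 0 to 100 score to a Hot, Warm, or Cold label can be pictured as a pair of cutoffs. The sketch below is illustrative only: the cutoff values (70 and 40) and the names `BandCutoffs` and `classify_lead` are hypothetical, since the actual ranges are derived per deployment (this article's FAQ says they come from k-means clustering).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BandCutoffs:
    """Hypothetical score boundaries; real ranges are derived per deployment."""
    hot_min: float = 70.0   # scores >= hot_min are Hot
    warm_min: float = 40.0  # scores >= warm_min and < hot_min are Warm


def classify_lead(score: float, cutoffs: BandCutoffs = BandCutoffs()) -> str:
    """Map a 0-100 lead score to a Hot, Warm, or Cold label."""
    if not 0 <= score <= 100:
        raise ValueError(f"score must be between 0 and 100, got {score}")
    if score >= cutoffs.hot_min:
        return "Hot"
    if score >= cutoffs.warm_min:
        return "Warm"
    return "Cold"


print([classify_lead(s) for s in (92, 55, 12)])  # ['Hot', 'Warm', 'Cold']
```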

Factors that affect the lead scoring process

Several factors affect the lead-scoring process:

- Lead Details: Attributes such as Product or Age significantly influence the lead score because they correlate strongly with conversion, unlike attributes such as Name, Phone, or Email.

- Lead State Updates: Scores are recalculated whenever a lead's state is updated, and the data captured in each state affects the score.

- Model Accuracy: The accuracy of the ML model is crucial for effective lead scoring. Regular accuracy checks and retraining are essential to maintain optimal performance.
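Why an attribute like Product carries signal while Name does not can be seen with a simple per-value conversion rate. This is a minimal sketch on made-up leads, not Vymo's feature-selection method; `LEADS`, `conversion_rate_by`, and `distinct_ratio` are hypothetical names.

```python
from collections import defaultdict

# Hypothetical historical leads: (product, age_band, name, converted)
LEADS = [
    ("Term Life", "30-40", "Asha", True),
    ("Term Life", "30-40", "Ravi", True),
    ("Term Life", "40-50", "Meera", True),
    ("Health", "20-30", "John", False),
    ("Health", "30-40", "Priya", False),
    ("Health", "20-30", "Karan", True),
]


def conversion_rate_by(leads, column):
    """Return {attribute value: share of leads with that value that converted}."""
    totals, wins = defaultdict(int), defaultdict(int)
    for row in leads:
        value, converted = row[column], row[-1]
        totals[value] += 1
        wins[value] += int(converted)
    return {value: wins[value] / totals[value] for value in totals}


def distinct_ratio(leads, column):
    """Distinct values per lead; 1.0 means every lead has its own value."""
    return len({row[column] for row in leads}) / len(leads)


print(conversion_rate_by(LEADS, 0))  # Term Life -> 1.0, Health -> about 0.33
print(distinct_ratio(LEADS, 0), distinct_ratio(LEADS, 2))
```

In this toy data, Product splits leads into groups with clearly different conversion rates, while Name has a distinct ratio of 1.0: every value occurs once, so there is no repeated pattern for a model to learn from.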

Note

Data that doesn't enter the Vymo gateway is not used in the lead scoring process.

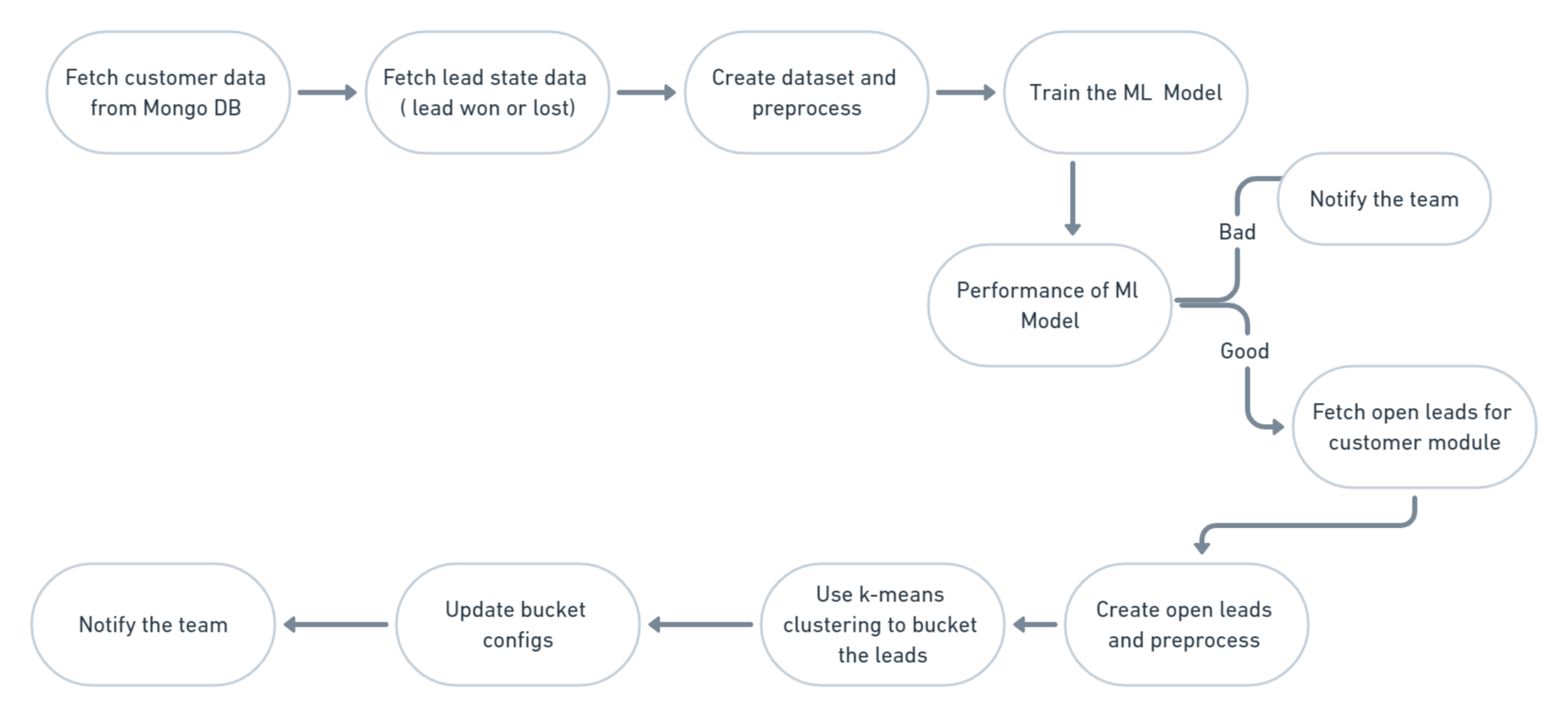

Lead score calculation process

The lead scoring process involves several stages:

- Data Preparation: This includes data cleaning, normalization, standardization, handling unseen or null values, and feature engineering.

- Model Inference: Leads are scored with the trained ML model, which considers lead attributes and historical conversion data.

- Model Evaluation: Model predictions are evaluated using accuracy, F1 score, and AUC.

- Model Retraining: Model performance can fluctuate as data distributions or business models change, so the ML model is retrained periodically.

Flowchart describing the ML training pipeline
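Two of the data preparation steps, handling null values and standardization, can be sketched for a numeric attribute such as Age and a categorical one such as Product. This assumes mean imputation, z-score standardization, and one-hot encoding with an all-zero vector for unseen values; Vymo's actual choices are not documented here, and the function names are hypothetical.

```python
import statistics


def impute_and_standardize(values):
    """Fill nulls (None) with the observed mean, then z-score standardize."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("need at least one non-null value")
    mean = statistics.fmean(observed)
    filled = [mean if v is None else v for v in values]
    stdev = statistics.pstdev(filled)
    if stdev == 0:
        return [0.0] * len(filled)  # a constant column carries no information
    return [(v - mean) / stdev for v in filled]


def one_hot(value, known_categories):
    """One-hot encode a category; null or unseen values map to all zeros."""
    return [1 if value == category else 0 for category in known_categories]


ages = [30, None, 50]
print(impute_and_standardize(ages))  # the imputed (mean) age standardizes to 0.0
print(one_hot("Motor", ["Term Life", "Health"]))  # unseen value -> [0, 0]
```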

Eligibility criteria for lead scoring

| For module | For company |
|---|---|
| For a module to be eligible for lead scoring, its Accuracy, F1 score, and AUC must each exceed 85%. | Company data determines eligibility for lead scoring. |

Note

Accuracy, F1 score, and AUC are common metrics for gauging the performance of a machine learning model.
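The three metrics and the module eligibility check can be computed directly from historical outcomes and scores. This sketch assumes the threshold of 85 is read as 85% (0.85), and that a lead counts as a predicted conversion when its score is at least a hypothetical cutoff of 50. AUC is computed as the probability that a randomly chosen converted lead outscores a randomly chosen non-converted one, which is fine for small samples but quadratic in size.

```python
THRESHOLD = 0.85  # assumption: the "85" in the eligibility table read as 85%


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_score(y_true, y_pred):
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def auc(y_true, scores):
    """Chance a random converted lead outscores a random non-converted one (ties = 0.5)."""
    pos = [s for t, s in zip(y_true, scores) if t]
    neg = [s for t, s in zip(y_true, scores) if not t]
    if not pos or not neg:
        raise ValueError("AUC needs both converted and non-converted leads")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def is_module_eligible(y_true, scores, cutoff=50):
    """Hypothetical check: every metric must exceed THRESHOLD."""
    y_pred = [s >= cutoff for s in scores]
    metrics = {
        "accuracy": accuracy(y_true, y_pred),
        "f1": f1_score(y_true, y_pred),
        "auc": auc(y_true, scores),
    }
    return all(v > THRESHOLD for v in metrics.values()), metrics


converted = [True, True, True, False, False, False]
scores = [90, 80, 60, 40, 30, 70]
print(is_module_eligible(converted, scores))  # not eligible: accuracy is 5/6
```

Here F1 (6/7) and AUC (8/9) clear the bar, but one non-converted lead scored 70 pulls accuracy down to about 0.83, so the module would not qualify.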

Retraining the ML model

The ML model deployed in production requires regular monitoring and retraining. Changes in campaigns, data distribution, or business models can degrade model performance and make retraining necessary, for example:

- When the client or server configures a new campaign, or adds or deletes a lead attribute.

- When the data distribution or workflows change because of seasonal variations.

- When a new business model changes the company's target users.

Tracking these changes, monitoring the ML model's performance in production, and retraining the model when needed are mandatory.
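A retraining trigger can combine the two signals described above: accuracy on recently resolved leads, and a shift in the distribution of a lead attribute. The sketch below swaps in the Population Stability Index (PSI) as the drift measure; PSI, the 0.2 drift limit (a common rule of thumb), the 0.85 accuracy floor, the bin edges, and all function names are assumptions, not Vymo's documented pipeline.

```python
import math


def bin_fractions(values, edges):
    """Share of values per bin [edges[i], edges[i+1]); last bin includes its upper edge.
    Values outside the edges are not counted."""
    if not values:
        raise ValueError("values must not be empty")
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            if edges[i] <= v and (v < edges[i + 1] or (is_last and v == edges[-1])):
                counts[i] += 1
                break
    return [c / len(values) for c in counts]


def psi(expected, actual, eps=1e-6):
    """Population Stability Index between two binned distributions."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


def needs_retraining(recent_accuracy, train_values, recent_values, edges,
                     min_accuracy=0.85, max_psi=0.2):
    """Hypothetical trigger: accuracy dropped, or an attribute's distribution shifted."""
    drift = psi(bin_fractions(train_values, edges), bin_fractions(recent_values, edges))
    reasons = []
    if recent_accuracy < min_accuracy:
        reasons.append(f"accuracy {recent_accuracy:.2f} < {min_accuracy}")
    if drift > max_psi:
        reasons.append(f"PSI {drift:.2f} > {max_psi}")
    return bool(reasons), reasons


age_edges = [18, 30, 40, 50, 60, 100]
train_ages = [25, 28, 35, 38, 45, 48, 55, 65]
recent_ages = [22, 24, 25, 27, 29, 33, 44, 58]  # e.g. a campaign aimed at younger buyers
print(needs_retraining(0.88, train_ages, recent_ages, age_edges))
```

In this example accuracy still clears the floor, but the recent leads skew much younger than the training data, so the drift check alone flags the model for retraining.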

Some questions and answers

- How often is the model trained?
  - Monthly.

- How do you keep track of model prediction accuracy?
  - Through a MySQL table.

- How can you prove that hot leads actually convert more than warm or cold leads?
  - Through the monitoring pipeline.

- How are the hot, warm, and cold ranges determined?
  - Through k-means clustering.

- How quickly are the scores computed?
  - Within 30 minutes to an hour. Lead scores are not live scores.

- Are these customizable?
  - Yes, through self-serve, but this isn't recommended.

- For companies that are ineligible, is there any way to score leads manually?
  - Yes, through the API.

- When do you identify that the model needs to be retrained?
  - When the model performs poorly on new data and no longer meets the expected accuracy, it needs to be retrained.
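The k-means answer above can be illustrated on one-dimensional scores: cluster historical scores into three groups and place the Cold/Warm and Warm/Hot boundaries halfway between neighbouring cluster centres. This is a plain Lloyd's-algorithm sketch; the deterministic initialization, the midpoint rule for boundaries, the sample scores, and the function names are all assumptions rather than Vymo's implementation.

```python
import statistics


def kmeans_1d(scores, k=3, max_iter=100):
    """Lloyd's k-means on one-dimensional lead scores; returns sorted centroids."""
    data = sorted(scores)
    if k < 2 or len(data) < k:
        raise ValueError("need k >= 2 and at least k scores")
    # Deterministic start: centroids spread evenly across the sorted scores.
    centroids = [data[(len(data) - 1) * i // (k - 1)] for i in range(k)]
    for _ in range(max_iter):
        clusters = [[] for _ in range(k)]
        for x in data:
            nearest = min(range(k), key=lambda j: abs(x - centroids[j]))
            clusters[nearest].append(x)
        # Keep the old centroid if a cluster ends up empty.
        updated = [statistics.fmean(c) if c else centroids[j] for j, c in enumerate(clusters)]
        if updated == centroids:
            break  # centroids unchanged: converged
        centroids = updated
    return sorted(centroids)


def band_cutoffs(centroids):
    """For k=3, boundaries halfway between neighbouring centroids: (warm_min, hot_min)."""
    cold, warm, hot = centroids
    return (cold + warm) / 2, (warm + hot) / 2


historical_scores = [5, 10, 12, 45, 50, 55, 85, 90, 95]
centroids = kmeans_1d(historical_scores)
print(centroids, band_cutoffs(centroids))  # [9.0, 50.0, 90.0] (29.5, 70.0)
```

Because the boundaries come from the data, a company whose scores cluster differently would get different Hot, Warm, and Cold ranges from the same procedure.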